- Trigger Airflow DAG from Python: how to
- Trigger Airflow DAG from Python: full
- Trigger Airflow DAG from Python: code

If you're using a DataContextConfig or CheckpointConfig, ensure that the "datasources" field references your backend connection name.
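Here is a minimal sketch of one way to define that backend connection on the Airflow side. Airflow can read a connection from an environment variable named AIRFLOW_CONN_<CONN_ID> that holds a connection URI. The connection id my_postgres_db, the postgres connection type, and the credentials below are hypothetical placeholders. Whatever id you choose is the name your Great Expectations configuration should reference.

```python
from urllib.parse import quote


def airflow_conn_env(conn_id: str, conn_type: str, login: str, password: str,
                     host: str, port: int, schema: str) -> tuple[str, str]:
    """Return (environment variable name, connection URI) for an Airflow connection."""
    # Airflow looks connections up as AIRFLOW_CONN_<CONN_ID>, upper-cased.
    name = f"AIRFLOW_CONN_{conn_id.upper()}"
    # Percent-encode credentials so characters such as '@' or '/' survive in the URI.
    uri = (f"{conn_type}://{quote(login, safe='')}:{quote(password, safe='')}"
           f"@{host}:{port}/{quote(schema, safe='')}")
    return name, uri


if __name__ == "__main__":
    # Hypothetical values; reuse the same conn id in the "datasources" config.
    name, uri = airflow_conn_env("my_postgres_db", "postgres", "ge_user",
                                 "p@ss/word", "db.example.com", 5432, "analytics")
    # Export this in the environment of the Airflow scheduler and workers.
    print(f"export {name}='{uri}'")
```

The variable must be present in the environment of the Airflow processes that run the task. Setting it only inside an unrelated script has no effect on Airflow.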

# Trigger Airflow DAG from Python: code

First, Airflow has a button that triggers the DAG on demand: the first button on the left. Let's look inside the Airflow code to see how this button works.

The GreatExpectationsOperator can run a checkpoint on a dataset stored in any backend that is compatible with Great Expectations. To point the operator at an external dataset, you only need to set up an Airflow Connection to the Datasource and add that connection to your Great Expectations project.
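Outside the UI, Airflow 2.x exposes a stable REST API endpoint, POST /api/v1/dags/{dag_id}/dagRuns, that creates a new DAG run. This is the programmatic counterpart to pressing the trigger button. The sketch below builds that request with the standard library only. It assumes a webserver at http://localhost:8080 with the basic-auth API backend enabled. The DAG id ge_validation_dag and the admin credentials are hypothetical.

```python
import base64
import json
from urllib.request import Request, urlopen


def build_trigger_request(base_url: str, dag_id: str, conf: dict,
                          username: str, password: str) -> Request:
    """Build a POST that asks Airflow 2's stable REST API to create a DAG run."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/dagRuns"
    # "conf" is passed to the new DAG run as its run configuration.
    body = json.dumps({"conf": conf}).encode("utf-8")
    # Assumes the webserver's basic-auth API backend is enabled.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    headers = {"Content-Type": "application/json",
               "Authorization": f"Basic {token}"}
    return Request(url, data=body, headers=headers, method="POST")


def trigger(req: Request) -> dict:
    """Send the request and return the JSON description of the created DAG run."""
    with urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical host, DAG id, and credentials.
    req = build_trigger_request("http://localhost:8080", "ge_validation_dag",
                                {"source": "python_script"}, "admin", "admin")
    print(req.get_method(), req.full_url)
    # Against a running webserver: print(trigger(req)["dag_run_id"])
```

If the script runs on a machine where Airflow itself is installed, the `airflow dags trigger <dag_id>` CLI command is a simpler alternative to calling the API.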

# Trigger Airflow DAG from Python: full

Wilson Lian: I would like to trigger my Airflow DAG from a Python script. The idea is that each task should trigger an external DAG. I found a way in the documentation to trigger it from a Cloud Function. See this example.

This guide focuses on using Great Expectations with Airflow in a self-hosted environment. To use Great Expectations with Airflow within Astronomer, see Orchestrate Great Expectations with Airflow.

Checkpoints provide a convenient abstraction for bundling the validation of a Batch (or Batches) of data against an Expectation Suite (or several), together with the actions that should be taken after the validation.

The operator has several optional parameters, but it always requires either a data_context_root_dir or a data_context_config, and either a checkpoint_name or a checkpoint_config. For a full list of parameters, see GreatExpectationsOperator.

The data_context_root_dir should point to the great_expectations project directory that was generated when you created the project. If you're using an in-memory data_context_config instead, a DataContextConfig must be defined.

A checkpoint_name references a checkpoint in the project's CheckpointStore, which is defined in the DataContext (often the great_expectations/checkpoints/ path), so that a checkpoint_name = "" would reference the file great_expectations/checkpoints/taxi/pass/chk.yml. Along with a checkpoint_name, you can pass checkpoint_kwargs to the operator to specify additional configuration that overrides the stored checkpoint. Alternatively, a checkpoint_config can be passed to the operator in place of a name; it is defined as in this example.
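The required-argument rule can be expressed as a small check. This is a hypothetical helper, not part of the GreatExpectationsOperator API. It also assumes that each pair is mutually exclusive: exactly one of data_context_root_dir / data_context_config, and exactly one of checkpoint_name / checkpoint_config.

```python
from typing import Any, Optional


def check_required_args(data_context_root_dir: Optional[str] = None,
                        data_context_config: Optional[Any] = None,
                        checkpoint_name: Optional[str] = None,
                        checkpoint_config: Optional[Any] = None) -> list[str]:
    """Hypothetical helper: list problems with the operator's required arguments."""
    problems = []
    # Assumption: exactly one Data Context source must be given.
    if (data_context_root_dir is None) == (data_context_config is None):
        problems.append("pass exactly one of data_context_root_dir or data_context_config")
    # Assumption: exactly one checkpoint source must be given.
    if (checkpoint_name is None) == (checkpoint_config is None):
        problems.append("pass exactly one of checkpoint_name or checkpoint_config")
    return problems


if __name__ == "__main__":
    # Valid combination: a project directory plus a named checkpoint (both hypothetical).
    print(check_required_args(data_context_root_dir="/opt/airflow/great_expectations",
                              checkpoint_name="my_checkpoint"))
    # Invalid combination: no Data Context is supplied.
    print(check_required_args(checkpoint_name="my_checkpoint"))
```

Running a check like this before building the DAG surfaces a misconfigured task at parse time instead of at run time.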

A Data Context represents a Great Expectations project. It organizes storage and access for Expectation Suites, Datasources, notification settings, and data fixtures.

# Trigger Airflow DAG from Python: how to

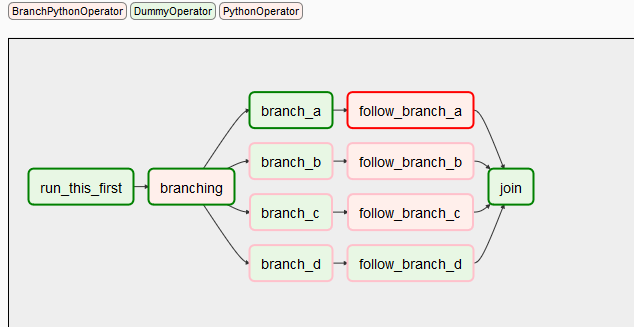

This document explains how to use the GreatExpectationsOperator to perform data quality work in an Airflow DAG. Before you create your DAG, make sure you have a Data Context and a Checkpoint configured. DAGs complete work through operators, which are templates that encapsulate a specific type of work.
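Here is a minimal preflight sketch for that check. It assumes a filesystem-backed project where data_context_root_dir contains great_expectations.yml and checkpoints are stored as YAML files under its checkpoints/ directory. The function name preflight and the checkpoint name are hypothetical.

```python
from pathlib import Path


def preflight(data_context_root_dir: str, checkpoint_name: str) -> list[str]:
    """Return a list of problems; an empty list means the project layout looks ready."""
    root = Path(data_context_root_dir)
    problems = []
    # A Great Expectations project directory holds its config in great_expectations.yml.
    if not (root / "great_expectations.yml").is_file():
        problems.append(f"no great_expectations.yml in {root}")
    # Assumes a filesystem CheckpointStore at the default checkpoints/ path.
    checkpoint_file = root / "checkpoints" / f"{checkpoint_name}.yml"
    if not checkpoint_file.is_file():
        problems.append(f"checkpoint file not found: {checkpoint_file}")
    return problems


if __name__ == "__main__":
    # Hypothetical project path and checkpoint name.
    for problem in preflight("/opt/airflow/great_expectations", "my_checkpoint"):
        print(problem)
```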

How to Use Great Expectations with Airflow

Learn how to run a Great Expectations checkpoint in Apache Airflow, and how to use an Expectation Suite within an Airflow directed acyclic graph (DAG) to trigger a data asset validation. Airflow is a data orchestration tool for creating and maintaining data pipelines through DAGs written in Python.
